On July 17, Meta announced LLaMA 2, a new family of AI models. LLaMA 2 is trained on a mix of publicly available data and, according to Meta, performs significantly better than the previous generation of LLaMA models. Two flavors were released: LLaMA 2 and LLaMA 2-Chat, a model fine-tuned for two-way conversations. Each flavor comes in three versions, with parameter counts ranging from 7 billion to 70 billion. Meta is also freely releasing the model weights and code for researchers to build upon and improve the technology.

There are several ways to access LLaMA 2 for development work: you can download it from Hugging Face or access it via Microsoft Azure or Amazon SageMaker. If you just want to interact with the LLaMA 2-Chat version, you can visit llama2.ai, a chatbot demo hosted by the venture capital firm Andreessen Horowitz. This is the route I took to interact with LLaMA 2-Chat.

Since I was reading an excellent paper on symbolic regression, I decided to query LLaMA 2-Chat about this topic. Before I show my chat with the model, let me explain symbolic regression in case you are not familiar with it. In traditional regression, the form of the model (linear, polynomial, etc.) is assumed up front, and only the coefficients/parameters are determined to get the best possible accuracy. In contrast, symbolic regression searches a space of analytical expressions, together with their parameter values, to find the expression that best models a given dataset.
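To make the contrast concrete, here is a minimal sketch in Python. It is only a toy: the candidate set, the function names (`fit_scale`, `linear_regression`, `symbolic_regression`), and the dataset are all made up for illustration, and the search is a brute-force pass over five hand-picked expressions of the form c * f(x). Real symbolic regression tools explore a far larger expression space, typically with genetic programming, so treat this as an illustration of the idea rather than how any particular tool works.

```python
import math

# Toy dataset generated from y = 2 * sin(x). The search below does not know this form.
xs = [0.1 * i for i in range(1, 51)]
ys = [2.0 * math.sin(x) for x in xs]

# Hypothetical search space: candidate expressions of the form c * f(x).
CANDIDATES = {
    "c * x": lambda x: x,
    "c * x**2": lambda x: x * x,
    "c * sin(x)": math.sin,
    "c * exp(x)": math.exp,
    "c * log(x)": math.log,
}


def fit_scale(f, xs, ys):
    """Return the least-squares c for y ~ c * f(x) and the mean squared error."""
    fx = [f(x) for x in xs]
    c = sum(v * y for v, y in zip(fx, ys)) / sum(v * v for v in fx)
    mse = sum((c * v - y) ** 2 for v, y in zip(fx, ys)) / len(xs)
    return c, mse


def linear_regression(xs, ys):
    """Traditional approach: the form y = c * x is fixed, only c is fitted."""
    return fit_scale(lambda x: x, xs, ys)


def symbolic_regression(xs, ys):
    """Toy symbolic regression: search over expression forms AND fit c for each."""
    best = None
    for name, f in CANDIDATES.items():
        c, mse = fit_scale(f, xs, ys)
        if best is None or mse < best[2]:
            best = (name, c, mse)
    return best


if __name__ == "__main__":
    lin_c, lin_mse = linear_regression(xs, ys)
    print(f"fixed linear form: c = {lin_c:.3f}, MSE = {lin_mse:.4f}")
    name, c, mse = symbolic_regression(xs, ys)
    print(f"best expression found: {name} with c = {c:.3f}, MSE = {mse:.4g}")
```

The linear fit is stuck with the assumed form and can only tune its coefficient, while the search is free to pick a different expression, which is the distinction the rest of this post turns on.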

I started off by asking whether LLaMA 2-Chat is better than GPT-4. I followed up by asking about symbolic regression, as shown below.


The answer provided was not specific, so I asked LLaMA 2 for a concrete example. This resulted in the conversation shown below.


Clearly, the example provided is one of linear regression, not symbolic regression. Pointing this out to LLaMA 2 resulted in the following conversation, where I again had to point out that symbolic regression involves searching over different functions.

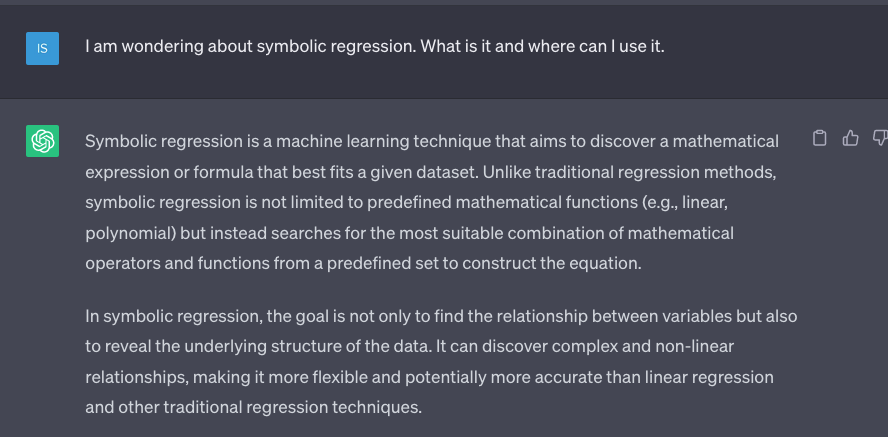

As you can see, LLaMA 2 had difficulty explaining symbolic regression and had to be told when it made mistakes. Next, I decided to go to ChatGPT to see what kind of response it would produce. Below is the ChatGPT output.

As you can see, ChatGPT explained symbolic regression clearly and even mentioned the use of genetic algorithms and genetic programming, which are key to symbolic regression.

So my take is to stick with ChatGPT for help on topics of interest. LLaMA 2 is lacking when it comes to providing clear explanations. Of course, my take is based on a conversation about just one topic.